History of computers

One of the first devices from which the history of computers can be traced was a special board, later called the "abacus" (5th-4th centuries BC). Calculations on it were carried out by moving bones or stones in the recesses of boards made of bronze, stone, ivory and the like. In Greece, the abacus already existed in the 5th century BC; the Japanese called it the "soroban" and the Chinese the "suanpan". In ancient Russia, a similar counting device was used, the "board count". In the 17th century, it took the form of the familiar Russian abacus.

Abacus (5th-4th centuries BC)

In 1642, the French mathematician and philosopher Blaise Pascal created his first calculating machine, which was named the Pascaline in honor of its creator. It was a mechanical device in the form of a box with many gears that performed subtraction as well as addition. Data was entered by turning dials that corresponded to the digits 0 to 9. The answer appeared at the top of the metal case.
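The way a row of ten-position dials can add with carries may be easier to see in a short sketch. The following Python is only an illustration of decimal carry propagation, not a model of Pascal's actual gear mechanism; the function names `add_on_wheels` and `read_wheels` and the six-wheel register are assumptions made for the example.

```python
def add_on_wheels(wheels, amount):
    """Add a non-negative integer to a register of decimal wheels.

    `wheels` holds one digit (0-9) per wheel, least significant first.
    Returns a new list; a carry out of the last wheel is dropped,
    as on a machine with a fixed number of wheels.
    """
    result = list(wheels)
    carry = 0
    for i in range(len(result)):
        digit = amount % 10          # how far this wheel's dial is turned
        amount //= 10
        total = result[i] + digit + carry
        result[i] = total % 10       # wheel position after turning
        carry = total // 10          # a full turn nudges the next wheel
    return result


def read_wheels(wheels):
    """Read the register as an ordinary integer."""
    return int("".join(str(d) for d in reversed(wheels)))


register = [0] * 6                   # six wheels, all showing 0
register = add_on_wheels(register, 758)
register = add_on_wheels(register, 467)
print(read_wheels(register))         # 1225
```

Each wheel only ever holds a digit from 0 to 9; everything else is passed to its neighbour as a carry.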

Pascaline

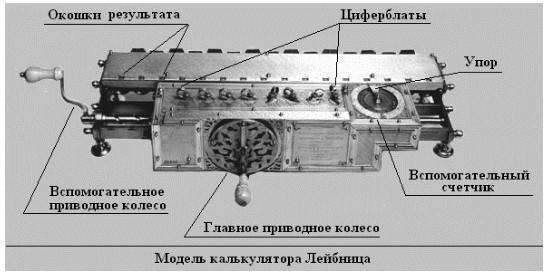

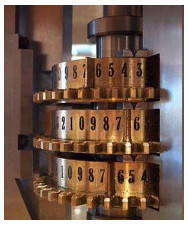

In 1673, Gottfried Wilhelm Leibniz created a mechanical calculating device (the Leibniz stepped reckoner), which for the first time not only added and subtracted, but also multiplied, divided and calculated square roots. The Leibniz wheel later became the basis for mass-produced calculating devices, the arithmometers.

Model of the Leibniz stepped reckoner

The English mathematician Charles Babbage developed a device that not only performed arithmetic operations, but also immediately printed the results. In 1832, a model reduced tenfold in scale was built from two thousand brass parts; it weighed three tons, but could perform arithmetic operations with an accuracy of six decimal places and calculate second-order differences. This machine, called the difference engine, became the prototype of real computers.
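Babbage's machine tabulated polynomial values by the method of finite differences, which needs nothing but repeated addition. The sketch below shows that method for a second-order case; the function name `tabulate_by_differences` and the sample polynomial are illustrative assumptions, not a description of the machine's actual mechanism.

```python
def tabulate_by_differences(initial, steps):
    """Tabulate a polynomial using additions only.

    `initial` is [f(0), first difference, second difference, ...] at x = 0.
    Each step adds every difference into the column to its left.
    """
    columns = list(initial)
    values = [columns[0]]
    for _ in range(steps):
        # Go left to right so each column absorbs the old value of its neighbour.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
        values.append(columns[0])
    return values


# f(x) = x*x + x + 41: f(0) = 41, first difference f(1) - f(0) = 2,
# and the second difference is the constant 2.
print(tabulate_by_differences([41, 2, 2], 5))  # [41, 43, 47, 53, 61, 71]
```

Because the second difference of a quadratic is constant, no multiplication is ever required once the starting column is set.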

Difference engine

The Russian mathematician and mechanic Pafnuty Lvovich Chebyshev created a summing apparatus with continuous transmission of tens. The device automated all arithmetic operations. In 1881, an attachment for multiplication and division was added to the summing apparatus. The principle of continuous transmission of tens has been widely used in various counters and computers.

Chebyshev summing apparatus

Automated data processing appeared at the end of the 19th century in the United States. Herman Hollerith created a device, the Hollerith tabulator, in which information recorded on punched cards was read by electric current.

Hollerith tabulator

In 1936, Alan Turing, a young scientist from Cambridge, devised an abstract computing machine that existed only on paper. His "smart machine" acted according to a predetermined algorithm. Depending on the algorithm, the imaginary machine could be used for a wide variety of purposes. At the time, however, these were purely theoretical considerations and schemes; they served as the prototype of the programmable computer, a computing device that processes data according to a given sequence of commands.
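The idea that one machine can serve different purposes depending on its table of rules can be sketched in a few lines. The simulator below is a minimal, simplified one-tape machine; the function `run_turing_machine`, the state names and the `increment` rule table are all hypothetical choices made for this illustration.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape machine until it reaches the state 'halt'.

    `rules` maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is -1 (left) or +1 (right).
    """
    cells = dict(enumerate(tape))    # sparse tape: position -> symbol
    position = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(position, blank)
        write, move, state = rules[(state, symbol)]
        cells[position] = write
        position += move
    ordered = "".join(cells[i] for i in sorted(cells))
    return ordered.strip(blank)


# Hypothetical rule table: add 1 to a binary number written on the tape.
increment = {
    ("start", "0"): ("0", +1, "start"),   # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),   # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),   # 1 + 1 = 0, the carry moves left
    ("carry", "0"): ("1", -1, "halt"),    # absorb the carry and stop
    ("carry", "_"): ("1", -1, "halt"),    # all ones: write a new leading 1
}

print(run_turing_machine(increment, "1011"))  # 1100
```

Swapping in a different rule table changes what the same simulator computes, which is the sense in which the machine is programmable.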

Information revolutions in history

In the history of civilization, there have been several information revolutions: transformations of social relations caused by changes in the processing, storage and transmission of information.

The first revolution is associated with the invention of writing, which led to a gigantic qualitative and quantitative leap for civilization. It became possible to pass knowledge from generation to generation.

The second revolution (mid-15th century) was caused by the invention of printing, which radically changed industrial society, culture, and the organization of activities.

The third revolution (end of the 19th century) came with discoveries in the field of electricity, thanks to which the telegraph, telephone and radio appeared: devices that make it possible to transmit and accumulate information quickly and in any volume.

The fourth revolution (since the 1970s) is associated with the invention of microprocessor technology and the advent of the personal computer. Computers and data transmission systems (information communications) are built on microprocessors and integrated circuits.

This period is characterized by three fundamental innovations:

- transition from mechanical and electrical means of information conversion to electronic ones;

- miniaturization of all components, devices, instruments and machines;

- creation of software-controlled devices and processes.

History of the development of computer technology

People needed to store, convert and transmit information long before the telegraph, the first telephone exchange and the electronic computer were created. In fact, all the experience and knowledge accumulated by mankind contributed, one way or another, to the emergence of computer technology. The history of computers, the general name for electronic machines that perform calculations, begins far in the past and is tied to the development of almost every aspect of human life and activity. For as long as human civilization has existed, some form of automated calculation has been in use.

The history of electronic computer technology spans about five decades. During this time, several generations of computers have succeeded one another. Each new generation was distinguished by new components (vacuum tubes, transistors, integrated circuits) whose manufacturing technology was fundamentally different. The generally accepted classification of computer generations is as follows:

- First generation (1946 - early 50s). Element base: vacuum tubes. The computers were large, consumed a lot of energy, and were slow and unreliable; they were programmed in machine code.

- Second generation (late 50s - early 60s). Element base: semiconductors. Almost all technical characteristics improved compared with the previous generation. Algorithmic languages were used for programming.

- Third generation (late 60s - late 70s). Element base: integrated circuits and multilayer printed wiring. Computers became much smaller, more reliable and more productive. Access from remote terminals appeared.

- Fourth generation (mid-70s - late 80s). Element base: microprocessors and large-scale integrated circuits. Specifications improved further, and personal computers went into mass production. Directions of development: powerful high-performance multiprocessor systems and cheap microcomputers.

- Fifth generation (since the mid-80s). Development of intelligent computers began but has not yet succeeded. Computer networks were introduced in all areas and interconnected, distributed data processing came into use, and computer information technologies became widespread.

As the generations of computers changed, so did the nature of their use. At first they were built and used mainly to solve computational problems; later their scope expanded to include information processing, the automation of production management, technological and scientific processes, and much more.

Konrad Zuse's principles of computer operation

The idea of building an automated calculating apparatus occurred to the German engineer Konrad Zuse, and in 1934 he formulated the basic principles on which future computers should work:

- binary number system;

- the use of devices operating on the principle of "yes / no" (logical 1 / 0);

- fully automated operation of the calculator;

- software control of the computing process;

- support for floating point arithmetic;

- use of large capacity memory.

Zuse was the first in the world to establish that data processing begins with the bit (he called the bit a "yes/no status" and the formulas of binary algebra "conditional propositions"), the first to introduce the term "machine word" (Word), and the first to combine arithmetic and logical operations in a calculator, noting that "the elementary operation of a computer is to check two binary numbers for equality. The result will also be a binary number with two values (equal, not equal)".
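The elementary operation Zuse describes, comparing two binary numbers and getting a one-bit answer, can be sketched as follows. This is a minimal illustration in Python, not Zuse's circuitry; the helper names `to_bits` and `bits_equal` and the 8-bit default width are assumptions made for the example.

```python
def to_bits(number, width=8):
    """Return the binary digits of a non-negative integer, most significant first."""
    return [(number >> shift) & 1 for shift in range(width - 1, -1, -1)]


def bits_equal(a, b):
    """Compare two equal-length bit lists; the result is itself one bit (1 or 0)."""
    result = 1
    for x, y in zip(a, b):
        same = 1 - (x ^ y)       # XNOR: 1 when the two bits agree
        result &= same           # stays 1 only if every pair agrees
    return result


print(to_bits(5, 4))                         # [0, 1, 0, 1]
print(bits_equal(to_bits(5), to_bits(5)))    # 1
print(bits_equal(to_bits(5), to_bits(6)))    # 0
```

The comparison uses only "yes/no" operations on individual bits, and its result is again a single yes/no value.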

First generation - computers with vacuum tubes

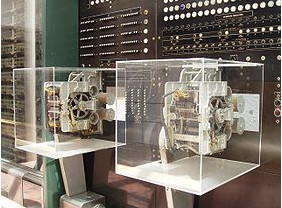

Colossus I, the first vacuum-tube computer, was created by the British in 1943 to decode German military ciphers. It contained 1800 vacuum tubes, which served as its information storage devices, and was one of the first programmable electronic digital computers.

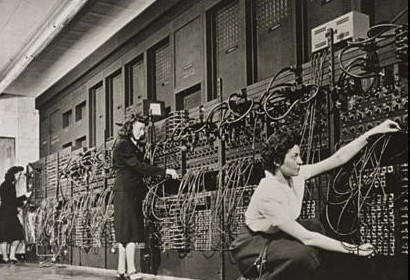

ENIAC was created to calculate artillery ballistics tables. The computer weighed 30 tons and contained 17468 vacuum tubes of sixteen types, 7200 crystal diodes and 4100 magnetic elements, housed in cabinets with a total volume of about 100 m³. It performed 5000 operations per second, and its total cost was $750,000. The figures quoted for its power consumption range from 130-140 kW to 174 kW, and those for the floor space it occupied range from 1000 square feet to 300 m².

ENIAC - a device for calculating artillery ballistics tables

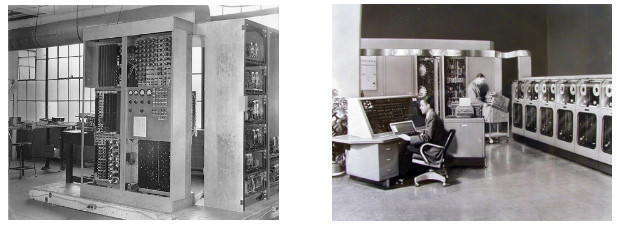

Another first-generation computer worth noting is EDVAC (Electronic Discrete Variable Automatic Computer). EDVAC is interesting because it attempted to store programs electronically in so-called "ultrasonic delay lines" built from mercury tubes. In 126 such lines it was possible to store 1024 words of 44 binary digits each. This was the "fast" memory. For "slow" memory, numbers and commands were to be recorded on magnetic wire, but this method proved unreliable, and the designers had to return to teletype tapes. EDVAC was faster than its predecessor, adding in about 1 ms and dividing in about 3 ms. It contained only 3.5 thousand vacuum tubes and occupied 13 m² of floor space.

UNIVAC (Universal Automatic Computer) was an electronic machine with programs stored in memory, which were loaded no longer from punched cards but from magnetic tape; this gave high read and write speeds and, consequently, a faster machine overall. One tape could hold a million characters written in binary form. Tapes could store both programs and intermediate data.

Representatives of the 1st generation of computers: 1) Electronic Discrete Variable Automatic Computer; 2) Universal Automatic Computer

Second generation - computers with transistors

Transistors replaced vacuum tubes in the early 1960s. Transistors, which act as electrical switches, consume less electricity, generate less heat and take up less space. Combining several transistor circuits on a single plate of semiconductor gives an integrated circuit (a "chip", literally a thin slice). Transistors work as binary elements: they register two states, the presence and the absence of current, and so process information presented to them in binary form.

In 1948, William Shockley invented the p-n junction transistor. The transistor replaces the vacuum tube while operating at a higher speed, generating very little heat and consuming far less electricity. As vacuum tubes were being replaced by transistors, information storage methods also improved: magnetic cores and magnetic drums came into use as memory devices, and by the 60s storing information on disks had become widespread.

One of the first transistorized computers, the Atlas Guidance Computer, was launched in 1957 and was used to control the launch of the Atlas rocket.

Introduced in 1957, the RAMAC was a low-cost computer with modular external disk memory that combined magnetic-core random access memory with drums. Although it was not yet completely transistorized, it was highly serviceable and easy to maintain, and was in great demand in the office automation market. A "large" RAMAC (IBM 305) was therefore urgently released for corporate customers; to hold 5 MB of data, the RAMAC system needed 50 disks 24 inches in diameter. An information system built on this model handled arrays of queries in 10 languages without difficulty.

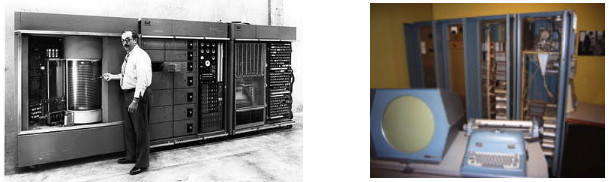

In 1959, IBM created its first fully transistorized large mainframe computer, the 7090, capable of 229,000 operations per second. In 1964, American Airlines used SABRE, an automated system built on two 7090 mainframes, to sell and book airline tickets in 65 cities around the world for the first time.

In 1960, DEC introduced the world's first minicomputer, the PDP-1 (Programmed Data Processor), a computer with a monitor and keyboard, which became one of the most notable products on the market. It was capable of performing 100,000 operations per second, and the machine itself occupied only 1.5 m² of floor space. The PDP-1 became, in effect, the world's first gaming platform thanks to MIT student Steve Russell, who wrote the computer game Spacewar! for it.

Representatives of the second generation of computers: 1) RAMAC; 2) PDP-1

In 1968, Digital began mass production of minicomputers for the first time with the PDP-8: it cost about $10,000 and was the size of a refrigerator. This was the model that laboratories, universities and small businesses were able to buy.

Soviet computers of that time can be characterized as follows: in their architectural, circuit and functional solutions they were up to date, but their capabilities were limited by an imperfect manufacturing and component base. The machines of the BESM series were the most popular. Serial production, on a rather small scale, began with the release of the Ural-2 computer (1958), followed by the BESM-2, Minsk-1 and Ural-3 (all in 1959). In 1960, the M-20 and Ural-4 went into series production. At the end of 1960, the M-20 had the highest performance, 20 thousand operations per second (4500 vacuum tubes, 35 thousand semiconductor diodes, memory of 4096 cells). The first computers based on semiconductor elements (Razdan-2, Minsk-2, M-220 and Dnepr) were still under development.

Third generation - small-sized computers on integrated circuits

In the 1950s and 60s, assembling electronic equipment was a labor-intensive process, slowed further by the growing complexity of electronic circuits. For example, the CDC 1604 computer (1960, Control Data Corp.) contained about 100,000 diodes and 25,000 transistors.

In 1959, the Americans Jack St. Clair Kilby (Texas Instruments) and Robert N. Noyce (Fairchild Semiconductor) independently invented the integrated circuit (IC), a collection of transistors (eventually thousands of them) placed on a single semiconductor chip inside a microcircuit.

The production of computers on ICs (they were called microcircuits later) was much cheaper than on transistors. Thanks to this, many organizations were able to acquire and master such machines. And this, in turn, led to an increase in demand for universal computers designed to solve various problems. During these years, the production of computers acquired an industrial scale.

At the same time, semiconductor memory appeared, which is still used in personal computers to this day.

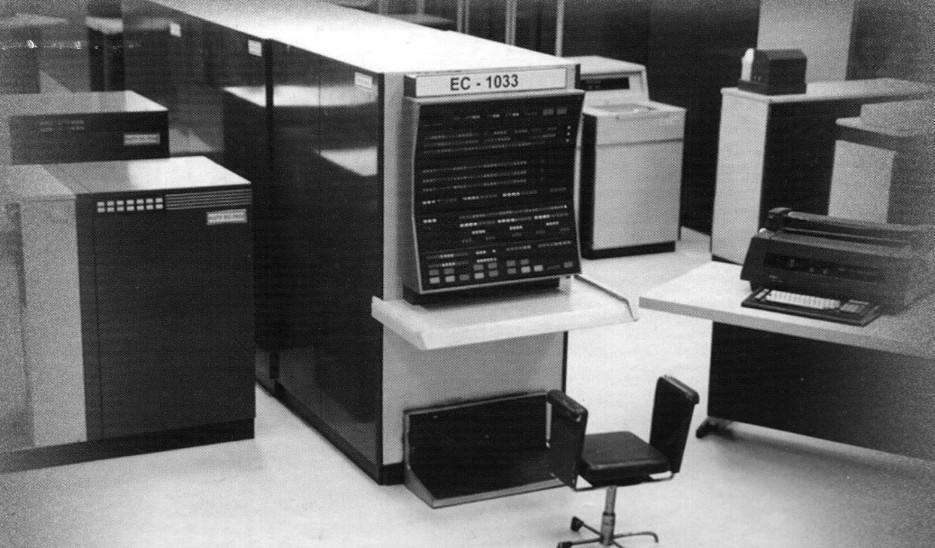

Representative of the third generation of computers - ES-1022

Fourth generation - personal computers with microprocessors

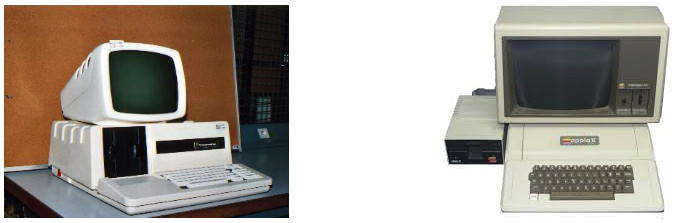

The forerunners of the IBM PC were the Apple II, Radio Shack TRS-80, Atari 400 and 800, Commodore 64 and Commodore PET.

The birth of personal computers (PCs) is rightly associated with Intel processors. The corporation was founded in July 1968. Intel has since become the world's largest manufacturer of microprocessors, with over 64,000 employees. Intel's original goal was to create semiconductor memory, and in order to survive, the company began to take outside orders for the development of semiconductor devices.

In 1969, Intel received an order to develop a set of 12 chips for programmable calculators, but creating 12 specialized chips seemed cumbersome and inefficient to Intel's engineers. The problem of reducing the number of chips was solved by pairing semiconductor memory with an execution unit capable of working on commands stored in it. It was a breakthrough in computing philosophy: a universal logic device in the form of the 4-bit central processing unit i4004, later called the first microprocessor. It was a set of 4 chips, including one chip controlled by commands stored in an internal semiconductor memory.

As a commercial product, the microcomputer (as the chip was then called) appeared on the market on November 15, 1971 under the name 4004: a 4-bit device containing 2300 transistors, with a clock frequency of 740 kHz and a price of $200. In 1972, Intel released the eight-bit 8008 microprocessor, and in 1974 its improved version, the Intel 8080, which by the end of the 70s had become the standard for the microcomputer industry (in the Soviet Union it was copied and produced for a long time under the name 580VM80). As early as 1973, the first computer based on the 8008 processor, the Micral, appeared in France; for various reasons, it was not successful in America. Meanwhile, a group of engineers left Intel and formed Zilog. Its best-known product is the Z80, which has an extended version of the 8080 instruction set and needs only a single 5 V supply, which made it a commercial success in home computers. Among others, the ZX Spectrum computer was built on it (it is sometimes called by the name of its creator, Sinclair), which practically became the prototype of the home PC of the mid-80s. In 1978, Intel released the 16-bit 8086 processor, followed in 1979 by the 8088, a counterpart of the 8086 with an external 8-bit data bus (all peripherals were still 8-bit at that time).

A competing machine, the Apple II, was distinctive in that it was not a completely closed device: the user had some freedom to extend it directly, for example by installing additional interface boards and memory boards. This feature, later known as "open architecture", became its main advantage. Two more innovations developed in 1978 contributed to the success of the Apple II: an inexpensive floppy disk drive and the first commercial calculation program, the VisiCalc spreadsheet.

The Altair-8800 computer, built on the Intel-8080 processor, was very popular in the 70s. Although the Altair's capabilities were rather limited (it had only 4 KB of RAM and no keyboard or screen), its appearance was greeted with great enthusiasm. It came onto the market in 1975, and several thousand kits were sold in the first months.

Representatives of the 4th generation of computers: a) Micral; b) Apple II

The Altair, designed by MITS, was sold by mail order as a DIY kit. The entire kit cost $397, while the Intel processor alone sold for $360.

The spread of the PC by the end of the 70s led to a slight decrease in demand for mainframes and minicomputers, which prompted IBM to develop the IBM PC, based on the 8088 processor introduced in 1979. The software that existed in the early 80s was focused on word processing and simple spreadsheets, and the very idea that a "microcomputer" could become a familiar and necessary device at work and at home seemed incredible.

On August 12, 1981, IBM introduced the Personal Computer (PC), which, in combination with software from Microsoft, became the standard for the entire PC fleet of the modern world. The IBM PC with a monochrome display cost about $3,000, and with a color display, $6,000. The IBM PC configuration: an Intel 8088 processor running at 4.77 MHz with 29 thousand transistors, 64 KB of RAM, one floppy drive with a capacity of 160 KB, and an ordinary built-in speaker. At the time, launching and working with applications was a real pain: with no hard drive, floppy disks had to be swapped constantly, and there was no mouse, no graphical windowed user interface, and no exact correspondence between the image on the screen and the final result (WYSIWYG). Color graphics were extremely primitive, and three-dimensional animation or photo processing was out of the question, but the history of personal computers began with this model.

In 1984, IBM introduced two more innovations. First, a model for home users, the 8088-based PCjr, was released; it was equipped with what was probably the first wireless keyboard, but it did not succeed in the market.

The second innovation was the IBM PC AT. Its most important feature was the move to higher-level microprocessors (the 80286 with the 80287 math coprocessor) while maintaining compatibility with previous models. This computer set the trend for many years in several respects: it was the first to introduce a 16-bit expansion bus (which long remained a standard) and EGA graphics adapters with a resolution of 640x350 and 16 colors.

1984 saw the release of the first Macintosh computers, with a graphical interface, a mouse, and many other user interface features that modern desktop computers cannot do without. The new interface did not leave users indifferent, but the revolutionary computer was compatible with neither existing programs nor existing hardware components. In the corporations of that time, WordPerfect and Lotus 1-2-3 had already become normal working tools, and users had grown accustomed to the character-based DOS interface. From their point of view, the Macintosh even looked somewhat frivolous.

Fifth generation of computers (from 1985 to the present)

Distinctive features of the 5th generation:

- New production technologies.

- Rejection of traditional programming languages such as Cobol and Fortran in favor of languages with enhanced character manipulation and elements of logic programming (Prolog and Lisp).

- Emphasis on new architectures (for example, data flow architecture).

- New user-friendly input/output methods (e.g. speech and image recognition, speech synthesis, natural language message processing).

- Artificial intelligence (that is, automation of the processes of solving problems, drawing conclusions, and manipulating knowledge).

It was at the turn of the 80s and 90s that the Windows-Intel alliance took shape. When Intel released the 486 microprocessor in early 1989, computer manufacturers did not wait for IBM or Compaq to set an example. A race began, which dozens of firms joined. But all the new computers were extremely similar to each other: they were united by compatibility with Windows and by processors from Intel.

The i486 had a built-in math coprocessor, a pipeline, and a built-in first-level cache.

Directions for the development of computers

Neurocomputers can be regarded as the sixth generation of computers. Although neural networks came into practical use relatively recently, neurocomputing as a scientific field is in its seventh decade, and the first neurocomputer was built in 1958. The machine was developed by Frank Rosenblatt, who named his brainchild the Mark I.

The theory of neural networks was first set out in the work of McCulloch and Pitts in 1943: any arithmetic or logical function can be implemented by a simple neural network. Interest in neurocomputing flared up again in the early 80s, fueled by new work on multilayer perceptrons and parallel computing.
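The claim that logical functions can be built from simple neurons can be illustrated with threshold units in the spirit of the McCulloch and Pitts model. The sketch below is a simplification: the function `threshold_neuron` and the particular weights and thresholds are assumptions chosen for the example (the original model used inhibitory inputs rather than negative weights).

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    return threshold_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    return threshold_neuron([a, b], [1, 1], threshold=1)


def logical_not(a):
    return threshold_neuron([a], [-1], threshold=0)


def logical_xor(a, b):
    # XOR needs two layers: (a OR b) AND NOT (a AND b).
    return logical_and(logical_or(a, b), logical_not(logical_and(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b), logical_xor(a, b))
```

AND, OR and NOT each need a single unit, while XOR needs units arranged in layers, which hints at why multilayer networks later drew so much attention.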

Neurocomputers are computers consisting of many simple computing elements, called neurons, that work in parallel. Neurons form so-called neural networks. The high speed of neurocomputers is achieved precisely through the huge number of neurons. Neurocomputers are built on a biological principle: the human nervous system consists of individual cells, neurons, whose number in the brain reaches 10¹², even though the response time of a single neuron is 3 ms. Each neuron performs fairly simple functions, but since it is connected on average to 1-10 thousand other neurons, such a collective successfully ensures the functioning of the human brain.

Representative of the sixth generation of computers - Mark I

In optoelectronic computers, the information carrier is a light flux. Electrical signals are converted to optical ones and back. Optical radiation as an information carrier has a number of potential advantages over electrical signals:

- Light fluxes, unlike electrical ones, can cross one another;

- Light fluxes can be confined transversely to nanometer dimensions and transmitted through free space;

- The interaction of light fluxes with non-linear media is distributed throughout the entire medium, which gives new degrees of freedom in organizing communication and creating parallel architectures.

Work is currently under way to create computers consisting entirely of optical information processing devices. This is the most interesting direction today.

An optical computer promises unprecedented performance and a completely different architecture from an electronic computer: in one clock cycle lasting less than 1 nanosecond (which corresponds to a clock frequency of more than 1000 MHz), an optical computer can process a data array of about 1 megabyte or more. Individual components of optical computers have already been built and optimized.

An optical computer the size of a laptop could let the user store in it almost all the information about the world, and could solve problems of any complexity.

Biological computers are conventional computers that rely on DNA computing. So few convincing demonstrations exist in this area that it is too early to speak of significant results.

Molecular computers are computers whose operation is based on changes in the properties of molecules during photosynthesis. During photosynthesis, a molecule assumes different states, so scientists need only assign a logical value, "0" or "1", to each state. Using certain molecules, scientists have found that their photocycle consists of only two states, which can be "switched" by changing the acid-base balance of the medium. The latter is very easy to do with an electrical signal. Modern technologies already make it possible to create entire chains of molecules organized in this way. It is therefore quite possible that molecular computers are waiting for us "just around the corner".

The history of computers is not over yet: alongside improvements to existing technologies, completely new ones are being developed. An example is quantum computers, devices that operate on the principles of quantum mechanics. A full-scale quantum computer is still a hypothetical device; the possibility of building one depends on serious advances in the quantum theory of many-particle systems and on complex experiments, and this work lies at the forefront of modern physics. Experimental quantum computers already exist, and elements of quantum computers can be used to increase the efficiency of calculations on existing hardware.